With the release of GPT models, vector databases are gaining huge popularity and coming into the limelight. Vector databases will power the next generation of AI applications and will serve as long-term memory for applications and workflows built on large language models (LLMs).

Why Do We Need Vector Databases?

In the early days of the internet, data was mostly structured, so it could easily be stored and managed by relational databases. Relational databases were designed to store and search data in tables.

As the internet grew and evolved, unstructured data such as text, images, and videos grew enormously in volume, and it became difficult to analyze, query, and draw insights from. Unlike structured data, it is not easy to manage with relational databases. Imagine trying to find a shirt similar to a given one in a collection of shirt images: a relational database cannot do this from the raw pixel values of the images alone.

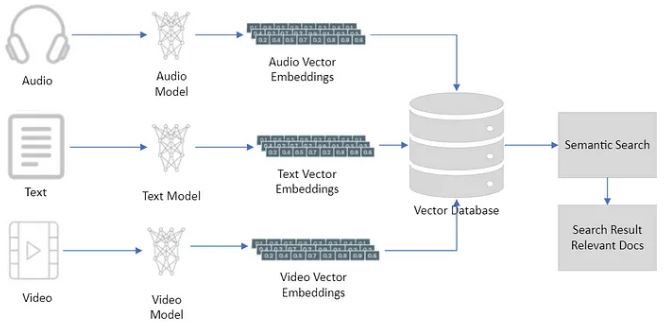

This brings us to vector databases. The growth of unstructured data has led to a steady rise in the use of machine learning models trained to understand such data. word2vec, a natural language processing (NLP) algorithm that uses a neural network to learn word associations, is a well-known early example. The word2vec model turns individual words into lists of floating-point values, or vectors. Because of the way the model is trained, vectors that are close to each other represent words that are similar to each other; these vectors are known as embedding vectors, or embeddings.
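To make "close to each other" concrete, the snippet below compares a few word vectors using cosine similarity, one common way to measure how close two embeddings are. The three-dimensional vectors are invented for illustration and are not real word2vec output; real word2vec vectors are learned from text and have many more dimensions.

```python
import math

# Toy 3-dimensional "embeddings". These numbers are made up for illustration;
# they are NOT produced by word2vec or any other real model.
embeddings = {
    "king":  [0.80, 0.65, 0.10],
    "queen": [0.78, 0.70, 0.12],
    "apple": [0.05, 0.10, 0.90],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Similar words should score close to 1.0; unrelated words should score lower.
print(round(cosine_similarity(embeddings["king"], embeddings["queen"]), 3))
print(round(cosine_similarity(embeddings["king"], embeddings["apple"]), 3))
```

With these toy values, "king" and "queen" point in almost the same direction, while "king" and "apple" do not, which is the property a vector database exploits when it searches by similarity.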

What is a Vector Database?

- A vector database is a type of database that stores, manages, and searches data in the form of vectors, also called embeddings.

- In this context, a vector is simply an array of floating-point numbers.

- Vectors are usually described by their dimension, which is the number of elements in the array. A 100-dimensional vector is an array of 100 floating-point numbers.

- In traditional databases, we usually query for rows based on exact matches or predefined criteria.

- In vector databases, we apply a similarity metric (such as cosine similarity or Euclidean distance) to find the stored vectors most similar to a query vector, which reflects similarity in semantic or contextual meaning.

- The vectors are generated by applying an embedding function to the raw data, such as text, images, audio, or video. The embedding function can be based on various methods, such as machine learning models, word embeddings, or feature extraction algorithms.
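The ideas in the list above (fixed-dimension vectors, and search by similarity rather than exact match) can be sketched with a tiny in-memory store. This is a minimal illustration, assuming cosine similarity as the metric and brute-force search over every stored vector. `InMemoryVectorStore` and the example vectors are hypothetical and do not mirror any real product's API; production vector databases typically use approximate nearest neighbor (ANN) indexes instead of scanning every vector.

```python
from __future__ import annotations

import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

class InMemoryVectorStore:
    """Hypothetical toy vector store using brute-force similarity search."""

    def __init__(self, dimension: int) -> None:
        self.dimension = dimension
        self._items = {}  # maps item id -> vector

    def add(self, item_id: str, vector: list[float]) -> None:
        # Every vector in the store must have the same dimension.
        if len(vector) != self.dimension:
            raise ValueError(f"expected {self.dimension} dimensions, got {len(vector)}")
        self._items[item_id] = vector

    def query(self, vector: list[float], k: int = 3) -> list[tuple[str, float]]:
        # Score every stored vector against the query and return the k most similar.
        scored = [(item_id, cosine_similarity(vector, v)) for item_id, v in self._items.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]

# Example usage with made-up 3-dimensional vectors.
store = InMemoryVectorStore(dimension=3)
store.add("blue-shirt", [0.90, 0.10, 0.00])
store.add("navy-shirt", [0.85, 0.15, 0.05])
store.add("red-shoes", [0.10, 0.20, 0.95])

print(store.query([0.88, 0.12, 0.02], k=2))
```

The query vector does not exactly match any stored vector, yet the store still returns the two closest items (the shirts, not the shoes). That is the key difference from an exact-match lookup in a traditional database.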

A few popular vector databases for building AI apps:

- Pinecone: A popular vector database provider that offers a developer-friendly, fully managed, and easily scalable platform for building high-performance vector search applications. It recently raised $100 million in Series B funding.

- Weaviate: A leading open-source vector database that lets users store data objects and vector embeddings from their preferred machine learning models, and scales seamlessly to billions of data objects.

- Milvus: An open-source vector database designed for highly scalable similarity search.

- Chroma: An AI-native open-source embeddings database. Using embeddings, Chroma lets developers add state and memory to their AI-enabled applications. The company aims to democratize robust, safe, and aligned AI systems for developers and organizations of all sizes. It recently raised an $18M seed round.

- Vespa: A fully featured search engine and vector database. It supports vector search (approximate nearest neighbor, or ANN), lexical search, and search in structured data, all in the same query. Integrated machine-learned model inference lets you apply AI to make sense of your data in real time. Vespa is available as open source or as a cloud-based service.
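Combining search in structured data with vector search in one query, as described for Vespa above, can be illustrated in plain Python: first filter records by their structured fields, then rank the survivors by vector similarity. This is only a conceptual sketch and not Vespa's query language or API; the `catalog` records, field names, and `filtered_search` function are hypothetical, and a real engine would use an ANN index rather than scoring every candidate.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Hypothetical catalog: each record has structured fields plus a made-up embedding.
catalog = [
    {"id": "shirt-1", "category": "shirt", "price": 25.0, "vector": [0.90, 0.10, 0.00]},
    {"id": "shirt-2", "category": "shirt", "price": 80.0, "vector": [0.88, 0.12, 0.02]},
    {"id": "shoe-1", "category": "shoe", "price": 30.0, "vector": [0.87, 0.10, 0.10]},
]

def filtered_search(query_vector, category, max_price, k=2):
    # Step 1: structured filter, similar in spirit to a SQL WHERE clause.
    candidates = [
        r for r in catalog if r["category"] == category and r["price"] <= max_price
    ]
    # Step 2: rank the remaining records by similarity to the query vector.
    candidates.sort(key=lambda r: cosine_similarity(query_vector, r["vector"]), reverse=True)
    return [r["id"] for r in candidates[:k]]

print(filtered_search([0.88, 0.12, 0.02], category="shirt", max_price=50.0))
```

Here "shirt-2" is the closest vector to the query, but the price filter removes it, and "shoe-1" is removed by the category filter, so only "shirt-1" is returned.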