Imagine an AI assistant that not only understands your requests but also has instant access to up-to-date, relevant information to answer them. That’s the promise of Retrieval-Augmented Generation (RAG), a cutting-edge approach that’s changing the way artificial intelligence interacts with the world.

So, what exactly is RAG? Traditional large language models (LLMs) rely solely on the knowledge they absorbed during training, which stops at a fixed cutoff date. RAG takes things a step further: it combines the power of an LLM with an external knowledge source, allowing the model to access and leverage additional information on the fly. This means your AI assistant can tap into news articles, research papers, or even your company’s internal documents to provide more accurate, up-to-date, and contextually relevant responses.

Here’s how it works:

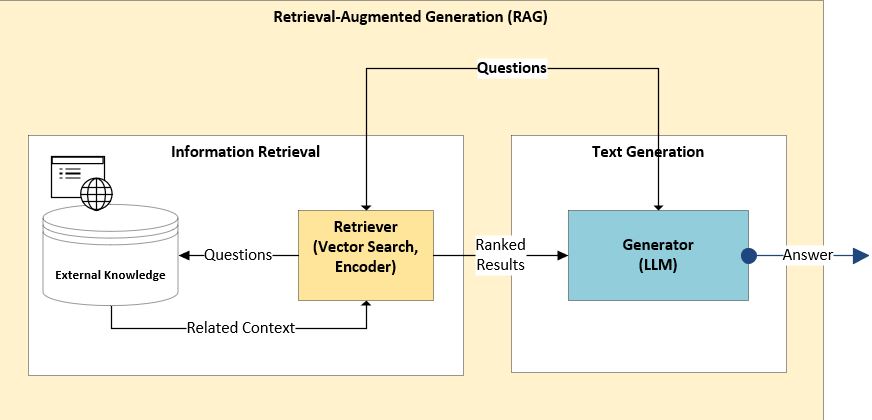

RAG consists of two main components: a Retriever and a Generator.

The retriever is responsible for finding and ranking the documents or passages most relevant to the user’s question, based on a similarity measure. The generator is responsible for producing a natural language response based on the user’s question and the retrieved documents or passages.
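To make the retriever’s role concrete, here is a minimal sketch of similarity-based ranking in Python. It assumes a toy setup: each text becomes a word-count vector (a stand-in for the learned embeddings a real retriever would typically use), and documents are ranked by cosine similarity to the question. The names `embed`, `cosine`, and `retrieve` are illustrative, not part of any library.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts. Real retrievers typically use
    # dense vectors produced by a trained embedding model instead.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity: dot product divided by the product of vector lengths.
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, documents: list[str], k: int = 2) -> list[tuple[float, str]]:
    # Score every document against the question and keep the k best matches.
    query = embed(question)
    scored = [(cosine(query, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    documents = [
        "Our refund policy allows returns within 30 days of purchase.",
        "The office is closed on public holidays.",
        "Refunds are issued to the original payment method.",
    ]
    for score, doc in retrieve("How many days do I have to request a refund?", documents):
        print(f"{score:.3f}  {doc}")
```

Here the refund-policy sentence ranks first because it shares "refund" and "days" with the question. The third document scores low even though it is on topic, because "refunds" and "refund" count as different words; that kind of miss is exactly what semantic embeddings are meant to avoid.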

The generator can be any pre-trained language model, such as GPT-4, capable of generating text for tasks like answering questions, translating languages, and completing sentences.

- Querying for Knowledge: When you ask a question, RAG first searches its external knowledge base using semantic retrieval, typically by comparing a vector embedding of your query with embeddings of the stored documents. This is like searching a library with super-powered AI librarians who understand not just keywords but also the deeper meaning of your query.

- Augmenting the Prompt: The retrieved information, relevant to your question, is then combined with your original prompt. This creates a richer context for the LLM, giving it a deeper understanding of what you’re looking for.

- Generating the Response: With this richer context, the LLM uses its language processing abilities to generate a response grounded in the retrieved information. This could be an answer to your question, creative text such as a poem or a piece of code, or even a translation tailored to your specific needs.
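The three steps above can be sketched end to end as follows. This is an illustrative outline under simplifying assumptions: retrieval uses plain word-overlap scoring rather than semantic search, and `generate` is any function that takes a prompt string and returns text, standing in for a call to a real LLM. The prompt wording and the `echo_llm` placeholder are hypothetical.

```python
import re
from typing import Callable


def words(text: str) -> set[str]:
    # Lowercase word set used for crude overlap scoring.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    # Step 1 (querying): rank documents by how many words they share with the
    # question. A real system would typically compare vector embeddings.
    q = words(question)
    ranked = sorted(documents, key=lambda doc: len(q & words(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    # Step 2 (augmenting): number the retrieved passages and place them
    # in front of the original question.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "Cite passages by their [number].\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer(question: str, documents: list[str],
           generate: Callable[[str], str], k: int = 2) -> str:
    # Step 3 (generating): hand the augmented prompt to the language model.
    passages = retrieve(question, documents, k)
    return generate(build_prompt(question, passages))


def echo_llm(prompt: str) -> str:
    # Hypothetical placeholder for a real LLM call: returns the prompt itself,
    # so you can inspect exactly what the model would receive.
    return prompt


if __name__ == "__main__":
    documents = [
        "Our refund policy allows returns within 30 days of purchase.",
        "The office is closed on public holidays.",
        "Refunds are issued to the original payment method.",
    ]
    print(answer("How many days do I have to request a refund?", documents, echo_llm, k=1))
```

Keeping the generator as a plain callable means the retrieval and prompt-building steps can be tested without any model, and a real LLM client can be plugged in later by wrapping it in a function with the same shape.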

RAG has several advantages over using a standalone language model, for example when building a chatbot:

- Increased Accuracy: By grounding answers in information that can be kept current in the knowledge base, RAG reduces the risk of outdated or inaccurate responses.

- Improved Context: The additional knowledge allows the LLM to understand the nuances of your request, leading to more relevant and helpful responses.

- Greater Flexibility: RAG can be adapted to different domains and tasks, often just by swapping or updating the knowledge base rather than retraining the model, making it a versatile tool for many applications.

- Transparency and Explainability: Some RAG systems can even cite the sources they used, making their results more transparent and verifiable.
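One common way to get citations is to number the retrieved passages in the prompt and ask the model to refer to them as `[1]`, `[2]`, and so on. Assuming that convention (an illustration, not a standard), a small helper like the hypothetical `cited_sources` below can map the markers in a generated answer back to the source documents so users can check them:

```python
import re


def cited_sources(answer: str, sources: list[str]) -> list[str]:
    """Return the sources referenced by [n] markers in a generated answer.

    Assumes passages were numbered from 1 in the prompt. Each source is listed
    once, in order of first citation; out-of-range numbers are ignored.
    """
    seen: set[int] = set()
    cited: list[str] = []
    for number in re.findall(r"\[(\d+)\]", answer):
        index = int(number)
        if 1 <= index <= len(sources) and index not in seen:
            seen.add(index)
            cited.append(sources[index - 1])
    return cited


if __name__ == "__main__":
    sources = ["refund-policy.md", "office-hours.md", "payments-faq.md"]
    reply = "Returns are accepted within 30 days [1]. Refunds go to the original card [1][3]."
    print(cited_sources(reply, sources))  # prints ['refund-policy.md', 'payments-faq.md']
```

Because a model can emit markers that match no passage, the helper skips out-of-range numbers. It only tells you which sources were cited; whether a cited passage actually supports the claim still has to be checked separately.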

RAG is still evolving, but it holds immense potential for many applications. Imagine chatbots that can answer your questions about current events, provide personalized recommendations, or even help with complex tasks by accessing relevant information on the fly.

As RAG continues to develop, it promises to break down the barriers between artificial intelligence and the real world. By seamlessly integrating external knowledge, RAG paves the way for AI that is not only intelligent but also truly informed, making it a valuable tool for individuals, businesses, and society as a whole.