Have you ever wondered how your phone understands your voice commands, or how chatbots manage to provide (somewhat) helpful responses? The answer lies in a fascinating field called Natural Language Processing (NLP). Think of NLP as the translator that bridges the gap between human language and the digital world. It allows machines to understand, analyze, and even generate human language, essentially teaching them to speak our tongue.

But how does this work? It’s not an easy feat! NLP cracks this code by employing a multi-step process:

Step-by-step process of NLP:

1. Text Preprocessing: The first step is getting the computer ready to understand the text. This involves tasks like:

- Sentence Segmentation: Splitting a piece of text into individual sentences.

- Tokenization: Breaking each sentence into individual units, such as words, called tokens.

- Normalization: Converting text to lowercase, removing punctuation, and handling typos.

- Stemming/Lemmatization: Reducing words to their base form (e.g., “running” to “run”).

2. Understanding the Meaning: Now comes the real magic! NLP uses various techniques to extract meaning from the preprocessed text:

- Part-of-Speech Tagging: Identifying the grammatical role of each word (e.g., noun, verb, adjective).

- Named Entity Recognition: Identifying and classifying named entities like people, organizations, and locations.

- Dependency Parsing: Understanding the relationships between words in a sentence.

3. Going Beyond the Surface: NLP doesn’t just understand individual words; it grasps the bigger picture:

- Word Embeddings: Representing words as numerical vectors, capturing their meaning and relationships to other words.

- Sentiment Analysis: Determining the emotional tone of a text (e.g., positive, negative, neutral).

- Topic Modeling: Discovering the main themes and topics discussed in a piece of text.

4. Generating Language: Not just understanding, but also creating! NLP can:

- Machine Translation: Translating text from one language to another while preserving meaning and nuance.

- Text Summarization: Condensing a text into a shorter version while capturing its key points.

- Chatbots and Virtual Assistants: Generating human-like responses to user queries.
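To make step 1 (Text Preprocessing) more concrete, here is a minimal, standard-library-only Python sketch of sentence segmentation, tokenization, normalization, and stemming. It is an illustration under simplifying assumptions, not production code: the regex sentence splitter will trip over abbreviations like "Dr.", and `crude_stem` with its `SUFFIXES` list is a hypothetical toy stemmer, far cruder than established algorithms such as the Porter stemmer.

```python
import re

# Hypothetical suffix list for a deliberately crude stemmer (not the Porter algorithm).
SUFFIXES = ("ing", "ed", "es", "s")


def segment_sentences(text):
    """Split text after '.', '!' or '?' when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokenize(sentence):
    """Split a sentence into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", sentence)


def normalize(tokens):
    """Lowercase every token and drop tokens that are pure punctuation."""
    return [t.lower() for t in tokens if re.search(r"\w", t)]


def crude_stem(word):
    """Strip the first matching suffix, keeping a stem of at least 3 characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # "running" -> "runn" -> "run": undo a doubled final consonant
            # (but not l, s or z, so "falling" stays "fall" and "passed" stays "pass").
            if stem[-1] == stem[-2] and stem[-1] not in "aeiouslz":
                stem = stem[:-1]
            return stem
    return word


def preprocess(text):
    """Full pipeline: sentences -> tokens -> normalized tokens -> stems."""
    return [
        [crude_stem(tok) for tok in normalize(tokenize(sentence))]
        for sentence in segment_sentences(text)
    ]


print(preprocess("The dogs were running fast. Cats jumped!"))
# [['the', 'dog', 'were', 'run', 'fast'], ['cat', 'jump']]
```

Note that "were" passes through unchanged: mapping it to "be" requires lemmatization, which looks words up in a dictionary of base forms rather than chopping off suffixes.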
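For step 2 (Understanding the Meaning), here is a toy part-of-speech tagger in Python: it looks each token up in a tiny hand-written lexicon and falls back to simple capitalization and suffix rules. The lexicon and rules are hypothetical, chosen only for illustration; real taggers, like real NER systems and dependency parsers, are statistical or neural models trained on annotated corpora. The input/output shape, one label per token, is the part that carries over.

```python
# Hypothetical mini-lexicon; real taggers learn tags from large annotated corpora.
# Tag names follow the Universal Dependencies (UPOS) tagset.
LEXICON = {
    "the": "DET", "a": "DET",
    "cat": "NOUN", "mat": "NOUN",
    "sat": "VERB", "on": "ADP",
}


def guess_tag(word):
    """Heuristic fallback for words missing from the lexicon."""
    if word[:1].isupper():
        return "PROPN"  # capitalized unknown word: guess a proper noun
    if word.endswith("ly"):
        return "ADV"
    if word.endswith(("ing", "ed")):
        return "VERB"
    if word.endswith(("ous", "ful", "ive")):
        return "ADJ"
    return "NOUN"  # default to the most common open word class


def pos_tag(tokens):
    """Tag each token by lexicon lookup, falling back to suffix heuristics."""
    return [(tok, LEXICON.get(tok.lower()) or guess_tag(tok)) for tok in tokens]


print(pos_tag(["Alice", "sat", "on", "the", "mat", "happily"]))
# [('Alice', 'PROPN'), ('sat', 'VERB'), ('on', 'ADP'), ('the', 'DET'), ('mat', 'NOUN'), ('happily', 'ADV')]
```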
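Sentiment analysis from step 3 can be illustrated with the simplest possible approach, a lexicon-based scorer: add up per-word scores and flip the sign of the next scored word after a negation. The word list and scores below are hypothetical, and modern systems typically use trained classifiers instead, but the sketch shows the basic mapping from text to a positive, negative, or neutral label.

```python
# Hypothetical word scores; real sentiment lexicons are much larger and tuned on data.
SENTIMENT_LEXICON = {
    "good": 1, "great": 2, "love": 2,
    "bad": -1, "terrible": -2, "boring": -1,
}
NEGATORS = {"not", "never", "no"}


def sentiment(tokens):
    """Sum word scores, flipping the sign of the next scored word after a negator."""
    score = 0
    negate = False
    for tok in tokens:
        word = tok.lower()
        if word in NEGATORS:
            negate = True
            continue
        value = SENTIMENT_LEXICON.get(word, 0)
        if value:
            score += -value if negate else value
            negate = False  # a negator only affects the next scored word
    if score > 0:
        label = "positive"
    elif score < 0:
        label = "negative"
    else:
        label = "neutral"
    return label, score


print(sentiment("I love this movie".split()))      # ('positive', 2)
print(sentiment("The plot was not good".split()))  # ('negative', -1)
```

Even this toy version shows why sentiment is hard: sarcasm ("great, another delay") or negation spread across a sentence would fool it, which is one reason learned models took over.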
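Text summarization from step 4 comes in two flavors: extractive (select existing sentences) and abstractive (write new sentences, as neural generation models do). The Python sketch below covers only the simpler extractive flavor, using a classic word-frequency heuristic; the stopword list and the averaging rule are illustrative choices, not a standard algorithm from any particular library.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list.
STOPWORDS = {"a", "an", "the", "is", "are", "of", "and", "to", "in", "it", "on"}


def content_words(text):
    """Lowercased word tokens with stopwords removed."""
    return [w for w in re.findall(r"\w+", text.lower()) if w not in STOPWORDS]


def summarize(text, n_sentences=1):
    """Extractive summary: keep the sentences whose words are most frequent overall."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        # Average (not total) frequency, so long sentences are not automatically favored.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:n_sentences])  # restore original sentence order
    return " ".join(sentences[i] for i in chosen)


doc = "NLP helps computers read text. NLP models summarize text. Cats sleep a lot."
print(summarize(doc))  # NLP models summarize text.
```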

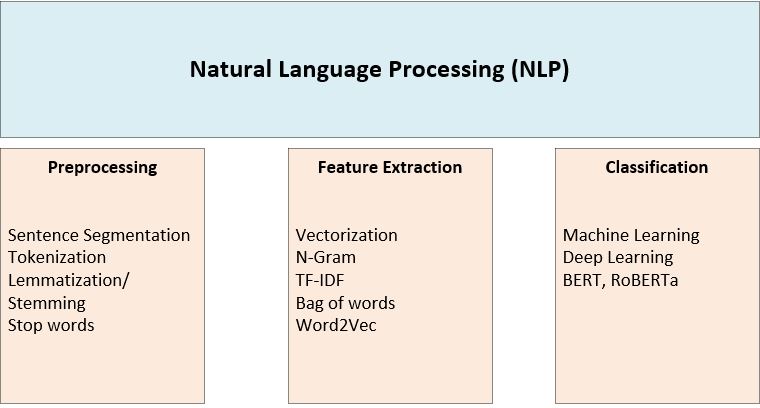

Now that we’ve explored the basics of NLP, let’s dive a little deeper into its technical side and see how its techniques have evolved over time:

Advanced techniques used for NLP:

- Word Embeddings: Capturing Meaning Beyond Words: One of the fundamental challenges in NLP is representing words numerically. Traditional one-hot encodings fail to capture the semantic relationships between words: every word gets its own dimension, so every pair of words looks equally unrelated. Enter word embeddings, such as Word2Vec and GloVe, which map words to dense vectors (typically a few hundred dimensions) in which semantically similar words are positioned closer together. This lets models pick up on similarities and relationships that raw word identities cannot express.

- Recurrent Neural Networks (RNNs): Understanding Sequence: Language is inherently sequential, with words strung together to form meaningful sentences. RNNs, including variants such as LSTMs and GRUs, are designed to handle this sequential nature. They process one word at a time while carrying a hidden state that summarizes the words seen so far, which made them a natural fit for tasks like sentiment analysis and machine translation.

- Attention Mechanisms: Focusing on What Matters: RNNs, even LSTMs, are powerful, but they struggle with long sequences because everything the model has read must be squeezed into a fixed-size hidden state. Attention mechanisms address this by letting the model focus on the parts of the input sequence that are most relevant at each step. This enables better handling of long sentences and of relationships between distant words.

- Deep Learning Architectures: Combining the Best of Both Worlds: Modern NLP models build on the Transformer architecture and Transformer-based models such as BERT. Transformers combine word embeddings with stacked attention layers and, unlike RNNs, drop recurrence entirely, so they can process all the words of a sentence in parallel rather than one at a time. This captures rich contextual information and achieves state-of-the-art performance on tasks like text summarization and question answering.
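The Word Embeddings point above can be illustrated by comparing cosine similarity under one-hot encoding and under dense vectors. The 3-dimensional vectors below are hypothetical, hand-picked to mimic what trained embeddings tend to do; they are not real Word2Vec or GloVe output.

```python
import math


def cosine(u, v):
    """Cosine similarity: 1.0 = same direction, 0.0 = orthogonal (unrelated)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


vocab = ["king", "queen", "apple"]

# One-hot: each word gets its own axis, so every pair of words is orthogonal.
one_hot = {w: [1.0 if i == j else 0.0 for j in range(len(vocab))] for i, w in enumerate(vocab)}

# Hypothetical hand-picked 3-d vectors standing in for learned embeddings
# (real Word2Vec or GloVe vectors are learned from text and typically have 100-300 dims).
embeddings = {
    "king": [0.80, 0.60, 0.10],
    "queen": [0.75, 0.65, 0.15],
    "apple": [0.10, 0.20, 0.95],
}

for a, b in [("king", "queen"), ("king", "apple")]:
    print(a, b,
          "one-hot:", round(cosine(one_hot[a], one_hot[b]), 2),
          "embedding:", round(cosine(embeddings[a], embeddings[b]), 2))
```

Under one-hot encoding both pairs score 0, while the dense vectors put "king" and "queen" near 1 and "king" and "apple" around 0.3, which is exactly the kind of graded similarity one-hot vectors cannot express.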
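The recurrence behind the RNN point above fits in a few lines of Python. This sketch is a plain (Elman) RNN, not an LSTM or GRU, which add gating on top of the same loop; the weights and the two toy input sequences are hypothetical.

```python
import math


def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Simple (Elman) RNN: h_t = tanh(W_xh @ x_t + W_hh @ h_{t-1} + b_h).

    Returns the hidden state after each time step.
    """
    hidden_size = len(b_h)
    h = [0.0] * hidden_size  # h_0: nothing seen yet
    states = []
    for x in inputs:
        # The comprehension reads the previous h before the new one is assigned.
        h = [
            math.tanh(
                sum(W_xh[i][j] * x[j] for j in range(len(x)))
                + sum(W_hh[i][k] * h[k] for k in range(hidden_size))
                + b_h[i]
            )
            for i in range(hidden_size)
        ]
        states.append(h)
    return states


# Hypothetical fixed weights (a real RNN learns these): 2-d inputs, 2-d hidden state.
W_xh = [[0.5, -0.3], [0.2, 0.4]]
W_hh = [[0.6, 0.0], [0.0, 0.6]]
b_h = [0.0, 0.0]

# Two toy "sentences" that end with the same word vector but start differently.
seq_a = [[1.0, 0.0], [0.0, 1.0]]
seq_b = [[0.0, 0.0], [0.0, 1.0]]
print(rnn_forward(seq_a, W_xh, W_hh, b_h)[-1])
print(rnn_forward(seq_b, W_xh, W_hh, b_h)[-1])
```

The two final states differ even though the last input is identical, because the hidden state carries information about the first word forward. Frameworks such as PyTorch provide trainable, optimized versions (`torch.nn.RNN`, `torch.nn.LSTM`, `torch.nn.GRU`).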
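The attention idea can be sketched as scaled dot-product attention, the form used in Transformers: score each input position by the dot product of its key with a query, turn the scores into weights with a softmax, and return the weighted average of the values. In matrix form this is softmax(QKᵀ / √d)V; the Python sketch below handles a single query, and the keys, values, and query are hypothetical toy numbers.

```python
import math


def softmax(xs):
    """Numerically stable softmax: turns scores into weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    # Relevance score of each input position: q . k_i / sqrt(d)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output: attention-weighted average of the value vectors
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return weights, output


# Hypothetical 2-d keys for three input words, and a query that "asks" for the second one.
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
query = [0.0, 3.0]

weights, output = attention(query, keys, values)
print([round(w, 2) for w in weights])
```

Most of the weight lands on the second word, with some spillover to the third, whose key partly matches the query. Because the toy values are one-hot, the output vector equals the weights, which makes the "soft lookup" behavior easy to see.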

Despite these advances, NLP still faces challenges such as bias, limited explainability, and poor support for low-resource languages. Ongoing research focuses on:

- Addressing bias: Identifying and mitigating biases present in datasets and models.

- Improving explainability: Making models more transparent in their decision-making process.

- Adapting to diverse languages: Developing techniques for languages with limited data resources.

Real-world applications of NLP:

- Smarter search engines: Say goodbye to irrelevant results! NLP helps search engines understand what your queries mean, not just which keywords they contain, leading to more relevant results.

- Chatbots that (almost) understand you: No more robotic responses – NLP allows them to anticipate your needs and respond naturally.

- Accurate translations: Forget literal, clunky translations. NLP captures the nuances of language for natural-sounding translations across cultures.

- Personalized experiences: From music recommendations to targeted ads, NLP helps tailor content to your individual preferences.

- Voice assistants that evolve: Imagine assistants that hold meaningful conversations, write personalized poems, or translate languages in real time. NLP is paving the way!

The future of NLP is bright, with continuous advancements opening new doors. We can expect even more complex models, personalized language interactions, and seamless integration into our daily lives. As technology evolves, NLP will continue to bridge the gap between human language and the digital world, shaping the future of communication and interaction.