Have you ever wondered how your phone understands your voice commands, or how chatbots manage to provide (somewhat) helpful responses? The answer lies in a fascinating field called Natural Language Processing (NLP). Think of NLP as the translator that bridges the gap between human language and the digital world. It allows machines to understand, analyze, and even generate human language, essentially teaching them to speak our tongue.

But how does this work? It’s no easy feat. NLP is a complex field with many sub-areas, but here’s a high-level overview:

1. Core Tasks & Concepts:

- Tokenization:

- Definition: Breaking text into individual units (tokens), usually words and punctuation marks; tokenizers for transformer models often split words further into subword pieces.

- Example:

- Input: “The cat sat on the mat.”

- Output: [“The”, “cat”, “sat”, “on”, “the”, “mat”, “.”]

- Tools: NLTK, spaCy, transformers tokenizers
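As a rough illustration of the idea, here is a minimal regex-based tokenizer written with only the Python standard library. The pattern and the `tokenize` name are assumptions made for this sketch; the libraries listed above handle many more edge cases (URLs, abbreviations, subwords).

```python
import re

# Match either a run of word characters (optionally with an internal
# apostrophe, as in "don't") or a single punctuation character.
TOKEN_PATTERN = re.compile(r"\w+(?:'\w+)?|[^\w\s]")


def tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


print(tokenize("The cat sat on the mat."))
# ['The', 'cat', 'sat', 'on', 'the', 'mat', '.']
```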

- Part-of-Speech (POS) Tagging:

- Definition: Identifying the grammatical role of each word (noun, verb, adjective, etc.).

- Example:

- Input: “The cat sat on the mat.”

- Output: [(“The”, “DT”), (“cat”, “NN”), (“sat”, “VBD”), (“on”, “IN”), (“the”, “DT”), (“mat”, “NN”), (“.”, “.”)] (Penn Treebank tags: DT=determiner, NN=singular noun, VBD=past-tense verb, IN=preposition)

- Tools: NLTK, spaCy, transformers

- Named Entity Recognition (NER):

- Definition: Identifying and classifying named entities in text (people, organizations, locations, dates, etc.).

- Example:

- Input: “Apple is planning to open a new store in London.”

- Output: [(“Apple”, “ORG”), (“London”, “GPE”)] (ORG=Organization, GPE=Geopolitical Entity)

- Tools: NLTK, spaCy, transformers

- Lemmatization/Stemming:

- Definition: Reducing words to their base or root form.

- Lemmatization: Reducing to the dictionary form (lemma) while considering the word’s meaning and context. More accurate than stemming but more computationally expensive. For example, “better” becomes “good”.

- Stemming: A simpler, faster process that strips affixes (usually suffixes) using heuristic rules, without consulting a dictionary. Less accurate, and the result is not always a real word. For example, “running” becomes “run”.

- Example:

- Input: “running, runs, ran”

- Lemmatization Output: “run, run, run”

- Stemming Output: “run, run, ran” (the irregular form “ran” is left unchanged, because suffix stripping cannot relate it to “run”)

- Tools: NLTK, spaCy
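The difference between the two approaches can be sketched with a toy example. The suffix list, the irregular-form table, and the function names below are hypothetical and hand-written for illustration; real stemmers and lemmatizers use far richer rules and dictionaries.

```python
# Hypothetical, hand-written tables for illustration only.
SUFFIXES = ("ing", "es", "s", "ed")  # checked in this order
IRREGULAR_LEMMAS = {"ran": "run", "better": "good"}


def toy_stem(word: str) -> str:
    """Strip the first matching suffix, then undo a doubled final consonant."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # "running" -> "runn" -> "run"
            if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeiouls":
                stem = stem[:-1]
            return stem
    return word


def toy_lemmatize(word: str) -> str:
    """Look up irregular forms first, then fall back to the stemmer."""
    return IRREGULAR_LEMMAS.get(word, toy_stem(word))


words = ["running", "runs", "ran"]
print([toy_stem(w) for w in words])       # ['run', 'run', 'ran']
print([toy_lemmatize(w) for w in words])  # ['run', 'run', 'run']
```

The stemmer only sees the surface form, so it cannot connect "ran" to "run"; the lemmatizer can, because it consults a table of known forms.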

- Parsing (Syntactic Analysis):

- Definition: Analyzing the grammatical structure of a sentence.

- Output: Often represented as a parse tree showing the relationships between words and phrases.

- Example: (Simplified) a tree whose root node is Sentence.

- Tools: NLTK, spaCy

- Semantic Analysis:

- Definition: Understanding the meaning of words, phrases, and sentences.

- Tasks:

- Word Sense Disambiguation: Determining the correct meaning of a word based on its context (e.g., “bank” as a financial institution vs. “bank” as the side of a river).

- Relationship Extraction: Identifying relationships between entities in text.

- Techniques: Word embeddings, knowledge graphs.

- Sentiment Analysis:

- Definition: Determining the emotional tone or attitude expressed in a text (positive, negative, neutral).

- Techniques: Lexicon-based approaches, machine learning classifiers, deep learning models.
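A lexicon-based approach can be sketched in a few lines. The word scores and the negation rule below are invented for this example and are far too small for real use; they only show the mechanics of summing polarities.

```python
import re

# Hypothetical mini-lexicon: word -> polarity score.
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "hate": -2}
NEGATIONS = {"not", "never", "no"}


def sentiment(text: str) -> str:
    """Classify text as positive, negative, or neutral by summing word scores."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = LEXICON.get(token, 0)
        # Flip the polarity when the previous token is a negation word.
        if i > 0 and tokens[i - 1] in NEGATIONS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this great phone"))
print(sentiment("This is not good"))
```

Machine learning and deep learning models replace the hand-written lexicon with weights learned from labeled examples.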

- Topic Modeling:

- Definition: Discovering the main topics or themes in a collection of documents.

- Techniques: Latent Dirichlet Allocation (LDA), Non-negative Matrix Factorization (NMF).

- Text Summarization:

- Definition: Creating a concise summary of a longer text.

- Techniques: Extractive summarization (selecting important sentences), abstractive summarization (generating new sentences).
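Extractive summarization can be approximated by scoring each sentence by how frequent its words are in the whole text. The following is a minimal sketch under that assumption; the stop-word list and the `summarize` function are hypothetical, and the scoring favors longer sentences, which real systems usually correct for.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list.
STOP_WORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "in", "it", "on"}


def summarize(text: str, num_sentences: int = 1) -> str:
    """Return the highest-scoring sentences, kept in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    words = [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]
    freq = Counter(words)

    def score(sentence: str) -> int:
        # Stop words were never counted, so they contribute zero.
        return sum(freq[w] for w in re.findall(r"[a-z]+", sentence.lower()))

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:num_sentences])
    return " ".join(sentences[i] for i in chosen)


text = (
    "NLP helps computers process language. "
    "Computers use NLP models to process language at scale. "
    "I had lunch."
)
print(summarize(text, num_sentences=1))
```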

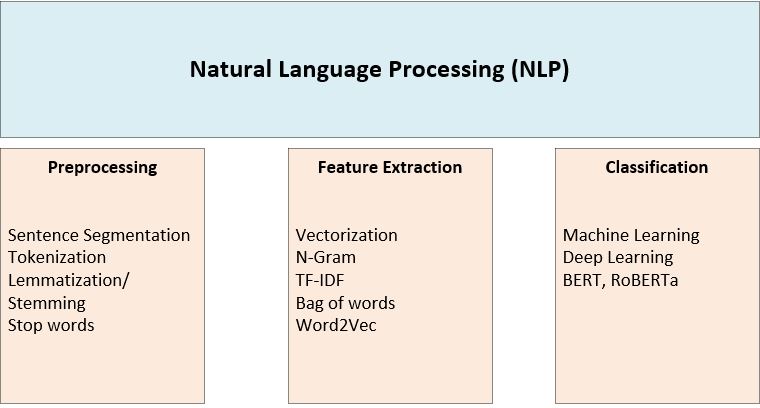

2. The NLP Pipeline (Typical Workflow):

A typical NLP application involves a series of steps:

- Data Collection: Gather the text data you want to process.

- Preprocessing: Clean and prepare the data:

- Remove HTML tags, special characters, etc.

- Tokenize the text.

- Lowercase the text.

- Remove stop words.

- Perform stemming or lemmatization.
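The preprocessing steps above can be sketched as a single function. This is a simplified illustration using only the standard library: the stop-word list is a hypothetical stand-in, the HTML handling is a naive regex rather than a real parser, and the stemming or lemmatization step is left out to keep the example short.

```python
import html
import re

# Hypothetical, deliberately tiny stop-word list.
STOP_WORDS = {"the", "a", "an", "on", "in", "is", "and", "of", "to"}


def preprocess(raw: str) -> list[str]:
    """Clean raw text and return a list of lowercase content tokens."""
    text = re.sub(r"<[^>]+>", " ", raw)      # remove HTML tags
    text = html.unescape(text)               # decode entities such as &amp;
    text = text.lower()                      # lowercase
    tokens = re.findall(r"[a-z0-9]+", text)  # tokenize, dropping special characters
    return [t for t in tokens if t not in STOP_WORDS]  # remove stop words


print(preprocess("<p>The cat sat on the <b>mat</b> &amp; purred!</p>"))
# ['cat', 'sat', 'mat', 'purred']
```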

- Feature Extraction: Convert the text into numerical features that machine learning models can understand.

- Bag-of-Words (BoW): Represents a document as a collection of words, ignoring word order.

- TF-IDF (Term Frequency-Inverse Document Frequency): Weights words based on their frequency in a document and their rarity across the entire corpus.

- Word Embeddings: Represent words as dense vectors in a high-dimensional space, capturing semantic relationships between words (e.g., Word2Vec, GloVe, FastText).
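To make Bag-of-Words and TF-IDF concrete, here is a small from-scratch sketch. The three documents are made up for the example, and the formula used is the plain textbook one, tf × log(N / df); libraries commonly apply smoothed or normalized variants, so their numbers may differ.

```python
import math
from collections import Counter

# Made-up toy corpus, already tokenized by whitespace.
docs = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "cats and dogs",
]
tokenized = [d.split() for d in docs]


def bag_of_words(tokens: list[str]) -> Counter:
    """Count how often each word occurs, ignoring word order."""
    return Counter(tokens)


def tf_idf(tokens: list[str], corpus: list[list[str]]) -> dict[str, float]:
    """Weight each term by its in-document frequency and its rarity in the corpus."""
    counts = Counter(tokens)
    n_docs = len(corpus)
    weights = {}
    for term, count in counts.items():
        tf = count / len(tokens)                      # term frequency
        df = sum(1 for doc in corpus if term in doc)  # document frequency
        idf = math.log(n_docs / df)                   # inverse document frequency
        weights[term] = tf * idf
    return weights


print(bag_of_words(tokenized[0]))
print(tf_idf(tokenized[0], tokenized))
```

In the first document, "cat" receives a higher weight than "the" even though "the" occurs twice, because "cat" appears in only one of the three documents.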

- Model Training (If applicable): Train a machine learning or deep learning model on the labeled data.

- Model Evaluation: Evaluate the performance of the model on a held-out test set.

- Deployment: Deploy the model to a production environment.

- Monitoring: Monitor the model’s performance over time and retrain it as needed.
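For the evaluation step, one common choice for classification tasks is to compare predictions on the held-out test set against the true labels using precision, recall, and F1. The sketch below assumes a binary spam/ham task; the labels and the function name are hypothetical.

```python
def precision_recall_f1(y_true, y_pred, positive="spam"):
    """Compute precision, recall, and F1 for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Made-up labels for a held-out test set and a model's predictions on it.
y_true = ["spam", "spam", "ham", "ham", "spam"]
y_pred = ["spam", "ham", "ham", "spam", "spam"]
print(precision_recall_f1(y_true, y_pred))
```

The same function can be rerun on fresh labeled samples during monitoring to notice when performance drifts.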

3. NLP Techniques & Approaches:

- Rule-Based Systems:

- How they work: Based on explicitly defined rules and grammars.

- Advantages: Simple to implement, easy to understand.

- Disadvantages: Limited in scope, difficult to scale to complex language, brittle (sensitive to variations in language).

- Example: A simple chatbot that responds to specific keywords.
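A keyword chatbot of this kind might look like the sketch below. The rules, replies, and function name are invented for illustration.

```python
import re

# Hypothetical rules: each pairs a keyword pattern with a canned reply.
RULES = [
    (re.compile(r"\b(hi|hello|hey)\b", re.IGNORECASE), "Hello! How can I help you?"),
    (re.compile(r"\b(hours|open)\b", re.IGNORECASE), "We are open 9am-5pm, Monday to Friday."),
    (re.compile(r"\b(bye|goodbye)\b", re.IGNORECASE), "Goodbye!"),
]
FALLBACK = "Sorry, I did not understand that."


def respond(message: str) -> str:
    """Return the reply of the first rule whose keywords appear in the message."""
    for pattern, reply in RULES:
        if pattern.search(message):
            return reply
    return FALLBACK


print(respond("Hello there"))
print(respond("What are your opening hours?"))
print(respond("When do you close?"))
```

The last call shows the brittleness mentioned above: "When do you close?" asks about opening hours but contains none of the expected keywords, so the bot falls back to its default reply.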

- Statistical NLP (Machine Learning):

- How they work: Use statistical models trained on large datasets to learn patterns in language.

- Advantages: More robust than rule-based systems, can handle more complex language, can learn from data.

- Disadvantages: Require large amounts of training data, can be biased by the data.

- Examples:

- Naive Bayes: Simple and fast classifier, often used for sentiment analysis.

- Support Vector Machines (SVMs): Effective for text classification.

- Hidden Markov Models (HMMs): Used for sequence labeling tasks like POS tagging.

- Conditional Random Fields (CRFs): More powerful sequence labeling models.
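As one concrete instance of the statistical approach, here is a from-scratch sketch of a multinomial Naive Bayes classifier with add-one (Laplace) smoothing. The class name and the four training sentences are made up; a real model would be trained on far more data, typically through a library such as scikit-learn.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Multinomial Naive Bayes over whitespace tokens, with add-one smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            # Start from the log prior, then add the log likelihood of each word.
            score = math.log(n_docs / total_docs)
            total_words = sum(self.word_counts[label].values())
            for word in text.lower().split():
                if word not in self.vocab:
                    continue  # ignore words never seen in training
                count = self.word_counts[label][word]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Made-up training data for a two-class sentiment task.
texts = [
    "great movie loved it",
    "wonderful acting great plot",
    "terrible movie hated it",
    "boring plot awful acting",
]
labels = ["pos", "pos", "neg", "neg"]
model = NaiveBayes().fit(texts, labels)
print(model.predict("great acting loved"))
print(model.predict("awful boring movie"))
```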

- Deep Learning for NLP:

- How they work: Use neural networks with multiple layers to learn complex representations of language.

- Advantages: Can learn very complex patterns, achieve state-of-the-art results on many NLP tasks.

- Disadvantages: Require very large amounts of training data, computationally expensive, can be difficult to interpret.

- Key Architectures:

- Recurrent Neural Networks (RNNs): Designed to process sequential data. Variants like LSTMs and GRUs are better at handling long-range dependencies.

- Transformers: Revolutionized NLP with the attention mechanism. Models like BERT, GPT, and T5 have achieved breakthrough performance.

- Convolutional Neural Networks (CNNs): Can be used for text classification and other NLP tasks.
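The attention mechanism behind transformers can be sketched in plain Python as scaled dot-product attention. This is a simplified illustration: real models add learned projection matrices, multiple heads, and run on tensors in a framework such as PyTorch or TensorFlow. The input vectors are made up.

```python
import math


def softmax(xs):
    """Turn a list of scores into weights that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Three made-up 2-dimensional token vectors attending to each other (self-attention).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(x, x, x):
    print(row)
```

Every output vector mixes information from all positions in the sequence at once, which is how attention relates distant words without stepping through the sequence one token at a time.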

4. Tools and Libraries:

- NLTK (Natural Language Toolkit): A popular Python library for NLP research and education.

- spaCy: A fast and efficient Python library for production-level NLP.

- Transformers (Hugging Face): A library that provides access to pre-trained transformer models.

- Gensim: A Python library for topic modeling and document similarity analysis.

- Scikit-learn: A general-purpose machine learning library that can be used for many NLP tasks.

- TensorFlow and PyTorch: Deep learning frameworks that can be used to build and train NLP models.

5. Examples of NLP Applications:

- Chatbots: Conversational agents that can interact with users in natural language.

- Machine Translation: Translating text from one language to another.

- Text Summarization: Automatically generating summaries of news articles or documents.

- Sentiment Analysis: Analyzing customer reviews to understand customer satisfaction.

- Spam Detection: Filtering out unwanted email messages.

- Question Answering: Answering questions posed in natural language.

- Speech Recognition: Converting spoken language into text.

- Text Generation: Generating text in many formats, such as poems, code, scripts, musical pieces, emails, and letters.

6. Key Challenges in NLP:

- Ambiguity: Words and sentences can have multiple meanings.

- Context Dependence: The meaning of a word or sentence can depend on the context in which it is used.

- Sarcasm and Irony: Detecting sarcasm and irony is difficult for computers.

- Idioms and Metaphors: Understanding idioms and metaphors requires knowledge of cultural context.

- Domain Specificity: NLP models trained on one domain may not perform well on another domain.

- Data Bias: Training data can contain biases that can be reflected in the model’s output.

In summary, NLP is a multifaceted field that combines computational techniques with linguistic knowledge to enable computers to process and understand human language. From basic tasks like tokenization to complex applications like machine translation, NLP is transforming the way we interact with computers and the world around us. As deep learning continues to advance, NLP models are becoming increasingly powerful and sophisticated, opening up new possibilities for automating and enhancing a wide range of language-related tasks.