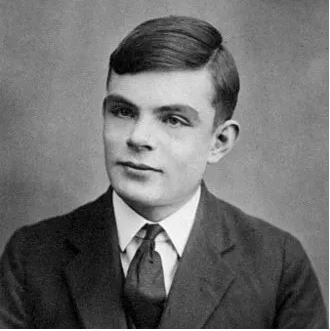

Foundation of AI:

Alan Turing, a British mathematician, was highly influential in the development of theoretical computer science. He formalized the concepts of algorithm and computation with the Turing machine, which can be considered a model of a general-purpose computer. Turing is widely considered to be the father of theoretical computer science and artificial intelligence.

During World War II, Turing was a leading participant in wartime code-breaking, particularly of German ciphers. He made five major advances in cryptanalysis, including specifying the bombe, an electromechanical device used to help decipher signals encrypted by the German Enigma machine. This wartime work is often cited as part of the groundwork for later research on machine intelligence. In 1950, Turing proposed that a machine able to converse with humans without being identified as a machine would win the “imitation game” and could be said to be “intelligent”.

1956, The birth of AI: Computer scientist John McCarthy coined the term “artificial intelligence” in the proposal for the 1956 Dartmouth conference, the first artificial intelligence conference. The objective was to explore ways to make a machine that could reason like a human and was capable of abstract thought, problem-solving, and self-improvement. He believed that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

1957: Frank Rosenblatt invented the perceptron, a type of neural network in which binary neural units are connected via adjustable weights. He came up with a fairly simple yet relatively efficient algorithm that enables the perceptron to learn the correct synaptic weights from examples.
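The learning rule described above can be sketched in a few lines. This is a minimal illustration, not Rosenblatt's original formulation: the function names, the learning rate, the number of epochs, and the choice of the logical AND task are all assumptions made for the example.

```python
def predict(weights, bias, inputs):
    """Binary threshold unit: fire (1) if the weighted sum is positive."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Adjust weights from labelled examples using the perceptron rule.

    samples: list of (inputs, target) pairs with target in {0, 1}.
    epochs and lr are illustrative defaults, not tuned values.
    """
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Nudge each weight in the direction that reduces the error.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Hypothetical training set: the logical AND of two binary inputs.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_SAMPLES)
```

AND is linearly separable, so the rule settles on weights that classify all four examples correctly. A single unit of this kind cannot learn a function such as XOR, which is not linearly separable.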

1963: MIT launched Project MAC, whose artificial intelligence group, led by Marvin Minsky, would later become the MIT Artificial Intelligence Lab. The research would contribute to cognition, computer vision, decision theory, distributed systems, machine learning, multi-agent systems, neural networks, probabilistic inference, and robotics. In the same year, John McCarthy, who had moved from MIT to Stanford, launched SAIL, the Stanford Artificial Intelligence Laboratory. The research institute would pave the way for work on operating systems, artificial intelligence, and the theory of computation.

1965: Early NLP computer program ELIZA: Joseph Weizenbaum, a professor at the Massachusetts Institute of Technology (MIT), published ELIZA, one of the most celebrated computer programs. The program interacted in English with a user sitting at an electric typewriter, in the manner of a Rogerian psychotherapist. Weizenbaum called the program “ELIZA” because “like the Eliza of Pygmalion fame, it could be taught to ‘speak’ increasingly well”. To Weizenbaum’s consternation, some users came to believe that ELIZA had real understanding, and they would unburden themselves in long computer sessions. In fact, the program was something of a trick (albeit a very clever one). All it did was look for keywords in the user’s input, decompose the sentence around them, and type the fragments back at the user in a manner that sustained the conversation.
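The “trick” can be illustrated with a toy responder. This is a rough sketch in the spirit of ELIZA, not Weizenbaum's script: the rules, the reflection table, and the fallback reply below are hypothetical and far smaller than the original.

```python
import re

# Hypothetical decomposition rules: a keyword pattern and a reply template.
RULES = [
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first- and second-person words so the echoed fragment reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "me", "your": "my"}


def reflect(fragment):
    """Rewrite a captured fragment from the user's point of view to the program's."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(text):
    """Return the reply of the first matching rule, or a neutral prompt."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."
```

For example, `respond("I am worried about my job.")` returns `"Why do you say you are worried about your job?"`. Nothing in the code models what a job or worry is, which is why Weizenbaum was troubled that users attributed understanding to the program.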

1966: Ross Quillian, a PhD student at Carnegie Mellon University, showed that a semantic network could use graphs to model the structure and storage of human knowledge. Quillian hoped to explore the meaning of English words through their relationships.
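A semantic network of this kind can be approximated as a labelled graph. The sketch below is an assumption-laden illustration rather than Quillian's model: the class name, the relation labels `is_a` and `can`, and the example words are all invented for the example.

```python
from collections import defaultdict


class SemanticNetwork:
    """Words are nodes; labelled edges record how the words relate."""

    def __init__(self):
        self.edges = defaultdict(list)  # node -> list of (relation, node)

    def add(self, subject, relation, obj):
        self.edges[subject].append((relation, obj))

    def ancestors(self, node):
        """Collect every category reachable by following 'is_a' links."""
        found, stack = [], [node]
        while stack:
            current = stack.pop()
            for relation, target in self.edges[current]:
                if relation == "is_a" and target not in found:
                    found.append(target)
                    stack.append(target)
        return found

    def abilities(self, node):
        """A word inherits the 'can' properties of all its categories."""
        result = set()
        for concept in [node] + self.ancestors(node):
            result.update(t for r, t in self.edges[concept] if r == "can")
        return result


# Hypothetical facts for illustration.
net = SemanticNetwork()
net.add("canary", "is_a", "bird")
net.add("bird", "is_a", "animal")
net.add("canary", "can", "sing")
net.add("bird", "can", "fly")
net.add("animal", "can", "breathe")
```

Here `net.abilities("canary")` yields `{"sing", "fly", "breathe"}`: part of the word's meaning is recovered from its relationships to other words rather than stored on the word itself.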

1972: “Domo arigato, Mr. Roboto”: Waseda University in Tokyo created the WABOT-1, or WAseda robOT, the first full-scale intelligent humanoid robot. It could walk, grip objects, speak Japanese, and listen. It could measure how far away it was from certain objects using its vision and auditory senses.

1980: Computer scientist Edward Feigenbaum, the father of expert systems, developed programs that make decisions the way a human expert can. Expert systems use rules to reason through a body of knowledge.
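Reasoning through knowledge with rules is often implemented as forward chaining: keep applying any rule whose conditions are already known until nothing new can be concluded. The sketch below assumes that approach. The rule set and fact names are hypothetical and do not come from any of Feigenbaum's systems.

```python
def forward_chain(facts, rules):
    """Derive every conclusion reachable from the starting facts.

    rules: list of (conditions, conclusion) pairs, where conditions is a
    tuple of facts that must all be known before the conclusion is added.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and set(conditions) <= known:
                known.add(conclusion)
                changed = True  # a new fact may enable further rules
    return known


# Hypothetical rule base for illustration only.
RULES = [
    (("fever", "cough"), "flu_suspected"),
    (("flu_suspected",), "recommend_rest"),
    (("rash",), "allergy_suspected"),
]

conclusions = forward_chain({"fever", "cough"}, RULES)
```

With the starting facts `fever` and `cough`, the first rule fires, which in turn enables the second. The `rash` rule never fires because its condition is not among the known facts.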

1980: Research scientist Kunihiko Fukushima published his work on the neocognitron, a deep convolutional neural network. Convolutional networks recognize visual patterns, and the neocognitron self-organized to recognize images by geometrical similarity of their shapes regardless of position.
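Recognition “regardless of position” can be illustrated by sliding one small template over an image and keeping only the strongest response. This is a simplified sketch of that single idea, not the neocognitron architecture. The helper names, the 6×6 image size, and the cross-shaped pattern are assumptions made for the example.

```python
def correlate(image, kernel):
    """Slide the kernel over the image and record the match score at each position."""
    kh, kw = len(kernel), len(kernel[0])
    scores = []
    for r in range(len(image) - kh + 1):
        row = []
        for c in range(len(image[0]) - kw + 1):
            row.append(sum(image[r + i][c + j] * kernel[i][j]
                           for i in range(kh) for j in range(kw)))
        scores.append(row)
    return scores


def max_response(image, kernel):
    """Pool over all positions: only the best match is kept, not where it occurred."""
    return max(max(row) for row in correlate(image, kernel))


def place(pattern, top, left, size=6):
    """Draw the pattern onto an otherwise empty size x size binary image."""
    image = [[0] * size for _ in range(size)]
    for i, row in enumerate(pattern):
        for j, value in enumerate(row):
            image[top + i][left + j] = value
    return image


# Hypothetical shape to detect.
CROSS = [[0, 1, 0],
         [1, 1, 1],
         [0, 1, 0]]
```

Because the same template is applied at every location and only the maximum is kept, `max_response(place(CROSS, 0, 0), CROSS)` and `max_response(place(CROSS, 3, 2), CROSS)` both evaluate to 5, the number of active cells in the pattern.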

1986: Carnegie Mellon University engineers built Navlab, the first autonomous car. The vehicle used five racks of computer hardware, video hardware, a GPS receiver, and a Warp supercomputer. It reached a top speed of 20 mph, or about 32 km/h.

AI Winters: Despite generous funding and enthusiasm over several decades, progress in AI stalled. Early successes on simple tasks were followed by poor results on complex tasks, and then by diminishing returns, disenchantment, and, in some cases, pessimism. Funding started drying up when unrealistic expectations could not be fulfilled. Governments and corporations started losing faith in AI.

As a result, from the mid-1970s to the mid-1990s, computer scientists dealt with an acute shortage of funding for AI research. These years became known as the ‘AI Winters’.

Return of AI: In the late 1990s, corporations once again became enthusiastic about AI. In 1997, IBM’s Deep Blue chess machine defeated the then world chess champion, Garry Kasparov, and the first official RoboCup football (soccer) tournament featured table-top matches with 40 teams of interacting robots and over 5000 spectators. In 1998, Tiger Electronics released Furby, which became the first successful attempt at bringing a type of AI into a domestic environment. In 2002, iRobot’s Roomba autonomously vacuumed the floor while navigating and avoiding obstacles. In 2004, NASA’s robotic exploration rovers Spirit and Opportunity autonomously navigated the surface of Mars.

AI funding problems arose again when the dotcom bubble burst in the early 2000s, but machine learning continued to progress because of improvements in processing power and data storage.

In the past 10 years, companies such as Google, Amazon, Baidu, and Facebook have leveraged machine learning for huge commercial gains. Corporations and governments continue to work on computer vision, NLP, robotics, and pattern recognition.