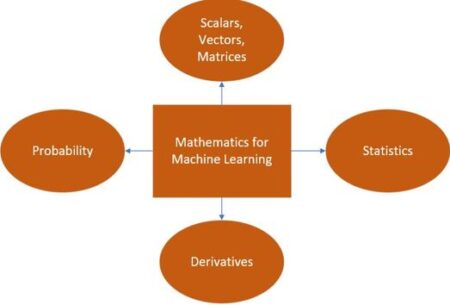

Vectors, matrices, derivatives, probability, and basic statistics are the core mathematical concepts underlying machine learning. We’ll look at concrete examples to make each one clear.

1. Vectors

- What they are: Ordered lists of numbers, typically represented as columns or rows.

- How they’re used in ML:

- Feature Vectors (Data Points): Each data point is represented as a vector, where each element corresponds to a feature.

- Example: If you’re classifying images of cats and dogs, a feature vector might include pixel intensities, or more complex features extracted from the image. A cat image could be represented as [100, 23, 201, 78, …] where each number corresponds to a feature.

- Example: In a housing price prediction model, a house could be represented as [3, 1500, 2, 1], standing for (number of bedrooms, square footage, number of bathrooms, has a garage), where the final 1 means yes.

- Model Parameters (Weights and Biases): Many models, particularly linear models and neural networks, store their trainable parameters (weights and biases) in vectors.

- Example: The weights of a linear regression model might be a vector like [0.5, 0.2, 0.8, -0.1], with one weight for each of the house features mentioned above.

- Embeddings: Used to represent categorical features or words in a dense vector space.

- Example: Words in a text classification model can be represented by word embedding vectors like [0.2, 0.7, -0.1, 0.4, …], allowing the model to capture word meanings and relationships.

- Why are they important? Vectors provide a fundamental way to represent and process data. They’re easy to manipulate mathematically and are the building blocks for matrix operations.
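
To make the house example concrete, here is a minimal sketch that treats plain Python lists as vectors and combines the feature vector [3, 1500, 2, 1] with the weight vector [0.5, 0.2, 0.8, -0.1] through a dot product, which is how a linear model would turn features into a single number. The `dot` helper is a name introduced here for illustration, no bias term is included, and in practice a numerical library would usually do this work.

```python
def dot(u, v):
    """Dot product of two equal-length vectors (plain Python lists)."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))


# Feature vector: (bedrooms, square footage, bathrooms, has a garage)
house = [3, 1500, 2, 1]

# Weight vector from the linear regression example, one weight per feature
weights = [0.5, 0.2, 0.8, -0.1]

# A linear model's raw output is the weighted sum of the features
prediction = dot(weights, house)
print(prediction)
```

Each weight scales one feature, so the square footage term (1500 × 0.2) dominates the result here. That is one reason features are often rescaled before training.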

2. Matrices

- What they are: Rectangular arrays of numbers.

- How they’re used in ML:

- Datasets: A dataset can be thought of as a matrix where each row is a data point (feature vector) and each column is a feature.

- Example: If you have 100 houses and 4 features each, the dataset is a 100×4 matrix.

- Transformation Matrices: In linear models, transformations are represented by matrix operations.

- Example: A neural network layer might have a weight matrix that maps input vectors to a different number of dimensions.

- Covariance Matrices: Represent the covariance (how features vary together) within a dataset.

- Image Representations: Images can be represented as matrices of pixel intensities (for grayscale) or a stack of matrices for color channels (RGB).

- Adjacency Matrices: Used in graph-based machine learning to represent the connections within a graph.

- Why are they important? Matrices let us store an entire dataset in one object and apply linear operations, such as linear transformations, to all of it at once.
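
The dataset and weight-matrix ideas above can be sketched with nested Python lists. This is an illustration under stated assumptions: the dataset is cut down to 3 houses instead of 100, the rows other than the first are made-up values, and the 2×4 weight matrix `W` and the `matvec` helper are hypothetical names chosen to keep the arithmetic easy to follow.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]


# Dataset matrix: each row is a house, each column is a feature
# (bedrooms, square footage, bathrooms, has a garage)
dataset = [
    [3, 1500, 2, 1],
    [2, 900, 1, 0],
    [4, 2200, 3, 1],
]
shape = (len(dataset), len(dataset[0]))  # (rows, columns)

# Hypothetical 2x4 weight matrix: maps a 4-dimensional input
# to a 2-dimensional output, as a layer without bias or activation would
W = [
    [1, 0, 1, 0],   # output 1: bedrooms + bathrooms
    [0, 1, 0, -1],  # output 2: square footage - garage flag
]

# Apply the same transformation to every row of the dataset
transformed = [matvec(W, row) for row in dataset]
print(shape)
print(transformed)
```

The transformation takes each 4-element row to a 2-element row, so the 3×4 dataset becomes a 3×2 one.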

3. Derivatives

- What they are: Measure the rate of change of a function.

- How they’re used in ML:

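- Optimization (Gradient Descent): In machine learning, we often try to find the model parameters that minimize a loss function (a measure of how poorly the model is performing). The gradient, which collects the derivatives of the loss with respect to each parameter, points in the direction of steepest increase of the loss, so gradient descent moves the parameters a small step in the opposite direction.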

- Example: For a linear regression, the derivative of the mean squared error loss function with respect to the model’s weights guides us towards a better set of weights.

- Example: In neural networks, derivatives are used in backpropagation to adjust the weights and biases by calculating gradients of the loss function with respect to each weight.

- Feature Selection and Sensitivity Analysis: Derivatives can help assess how much the model output changes with a small change in a specific feature. This can inform feature importance and help remove unnecessary inputs.

- Why are they important? Derivatives enable us to train models that minimize errors by iteratively adjusting the model parameters.
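
As a rough sketch of the linear regression example above, the code below fits a one-weight model y = w·x by gradient descent on the mean squared error. The data, the learning rate, the step count, and the function names are all assumptions chosen for illustration, not values from a real model.

```python
def mse(w, xs, ys):
    """Mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_gradient(w, xs, ys):
    """Derivative of the mean squared error with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


# Toy data generated by y = 2 * x, so the best weight is 2
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

w = 0.0               # starting guess
learning_rate = 0.01  # step size (assumed; must be small enough to converge)

for _ in range(200):
    # Step against the gradient, since the gradient points uphill
    w -= learning_rate * mse_gradient(w, xs, ys)

print(round(w, 4), mse(w, xs, ys))
```

At the starting point w = 0 the derivative is negative, which says that increasing w lowers the loss. Each update follows that signal until w settles near 2.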

4. Probability

- What it is: Measures the likelihood of an event occurring.

- How it’s used in ML:

- Model Building:

- Probabilistic Models: Many models, such as Naive Bayes and Bayesian networks, explicitly use probability distributions to model data.

- Loss Functions: Some loss functions, like cross-entropy, are derived from probabilistic concepts.

- Uncertainty Quantification: Probabilities allow us to express confidence or uncertainty about model predictions.

- Data Analysis:

- Data Modeling: Probability distributions help summarize the distribution of data.

- Feature Engineering: Probability-based techniques can be used to create features based on the probabilities of events.

- Dealing with Noise: Probabilistic frameworks are well suited to uncertain or noisy data.

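- Evaluation: Some metrics have a direct probabilistic reading. AUC (area under the ROC curve) is the probability that a randomly chosen positive example is scored higher than a randomly chosen negative one, and precision and recall can be read as conditional probabilities (the F1-score combines the two).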

- Specific techniques:

- Bayesian approaches to learning incorporate prior beliefs about model parameters.

- Sampling is used in techniques such as Markov chain Monte Carlo (MCMC) methods.

- Why is it important? Probability gives us a way to reason about uncertainty in our data and our models, as well as to build models that are rooted in probabilistic frameworks.
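
To illustrate how a loss function can come from probability, here is a minimal sketch of binary cross-entropy, the negative log-likelihood of the true labels under the predicted probabilities. The labels, the predicted probabilities, and the `eps` clipping value are assumptions made up for this example.

```python
import math


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean negative log-likelihood of binary labels given predicted P(y = 1)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        # Clip probabilities away from 0 and 1 to avoid log(0)
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


labels = [1, 0, 1]

# Predicted probability that each label is 1
mostly_right = [0.9, 0.1, 0.8]
mostly_wrong = [0.1, 0.9, 0.2]

loss_right = binary_cross_entropy(labels, mostly_right)
loss_wrong = binary_cross_entropy(labels, mostly_wrong)
print(loss_right, loss_wrong)
```

The loss is small when the model assigns high probability to the correct labels and grows quickly when it is confidently wrong, which is what makes it useful as a training signal.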

5. Basic Statistics

- What it is: The science of collecting, analyzing, and interpreting data.

- How it’s used in ML:

- Data Understanding:

- Descriptive Statistics (mean, median, mode, standard deviation): To summarize and understand the basic properties of the data distribution.

- Visualization: Histograms and scatter plots reveal data patterns and outliers.

- Data Preprocessing:

- Normalization and Standardization: Rescale features to a common range (normalization) or to zero mean and unit standard deviation (standardization).

- Outlier Detection: Identify unusual data points.

- Feature Selection: Using statistical tests to identify relevant features.

- Model Evaluation:

- Metrics (accuracy, precision, recall, F1-score): To evaluate the performance of models.

- Hypothesis testing: To determine whether a difference in model performance is statistically significant rather than due to chance.

- Cross-validation: Repeatedly splitting the data into training and validation sets to estimate model performance on unseen data.

- Understanding bias and variance: To understand the sources of error in a model and improve its performance.

- Dealing with Imbalanced Data: Statistical techniques like resampling are used to deal with rare events in classification tasks.

- Why is it important? Statistics provides a toolkit for analyzing and understanding data, preparing it for use in machine learning models, and evaluating the performance of those models.
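
The descriptive statistics and standardization steps above can be sketched with Python's built-in `statistics` module. The square footage values are made up for illustration (only 1500 comes from the house example), and using the population standard deviation is one choice among several.

```python
import statistics

# Hypothetical square footage values for four houses
square_footage = [1500, 900, 2200, 1200]

# Descriptive statistics summarize the feature
mu = statistics.mean(square_footage)
med = statistics.median(square_footage)
sigma = statistics.pstdev(square_footage)  # population standard deviation

# Standardization: subtract the mean, divide by the standard deviation
standardized = [(x - mu) / sigma for x in square_footage]

print(mu, med, sigma)
print(standardized)
```

After standardization the feature has mean 0 and standard deviation 1, so it sits on the same scale as other standardized features regardless of its original units.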

In Summary:

- Vectors are used to represent data points, parameters, and embeddings.

- Matrices organize datasets and represent transformations.

- Derivatives guide the optimization process and enable learning.

- Probability provides a way to deal with uncertainty and build models that represent it.

- Statistics enables data understanding, preprocessing, and model evaluation.

All of these concepts are interwoven, forming a foundation on which many machine learning models are built. Understanding their purpose within the machine learning context will help you become a proficient practitioner.