While AI has been a buzzword for years, generative AI, particularly the emergence of ChatGPT in 2022, has catapulted it into the global spotlight. This breakthrough has triggered an unprecedented wave of AI development and implementation across industries.

What is Generative AI?

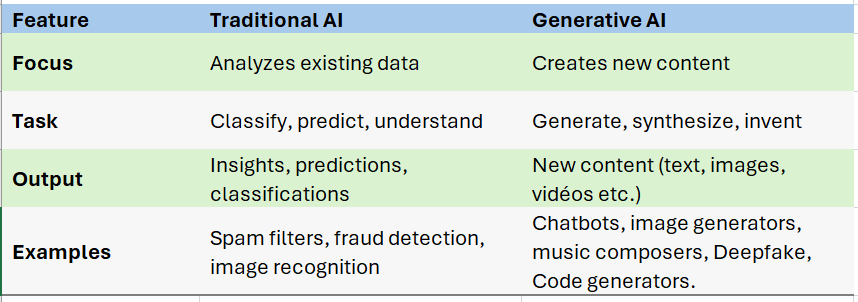

Generative AI, unlike traditional AI that focuses on analysis and prediction, is designed to create. It’s like giving AI a creative spark, enabling it to generate new text, images, audio, video, and even code.

Traditional AI excels at understanding and interpreting existing information, while Generative AI takes it a step further by using that understanding to create something entirely new.

Think of ChatGPT, Gemini, DALL-E, and Stable Diffusion as prime examples of this creative potential.

Key differences from traditional AI:

Examples of Generative AI Models:

- DALL-E 2, Stable Diffusion (Image generation): Can create realistic images based on text descriptions.

- ChatGPT, Gemini (Text generation): Can generate human-like text, write stories, translate languages, and answer questions.

- Codex, GitHub Copilot, AlphaCode (Code generation): Can generate original code, autocomplete code snippets, translate between programming languages, and summarize code functionality.

- Jukebox (Music generation): Can create different genres of music in various styles.

Evolution of Generative AI:

Generative AI experienced a major leap forward in 2014 with the introduction of Generative Adversarial Networks (GANs) by Ian Goodfellow and his colleagues. GANs pit two networks against each other in a competitive training process, resulting in images that, while initially blurry, became more realistic over time. However, training and scaling GANs proved challenging.

In 2017, Google researchers introduced “Transformers,” a new type of neural network that revolutionized natural language processing. Transformers use “attention mechanisms” to understand context within text, focusing on the relationships between words rather than just processing them in sequence. This breakthrough led to a significant improvement in text translation and paved the way for large language models (LLMs) such as the GPT series, which power tools like ChatGPT, GitHub Copilot, and Microsoft Bing. These LLMs are trained on vast amounts of text data, allowing them to generate fluent, human-like text.

While transformers have proven effective in computer vision, a newer technique called “latent diffusion” has emerged, producing stunning high-resolution images through products like Stable Diffusion (from Stability AI) and Midjourney. These diffusion models combine strengths of both GANs and transformers with a physics-inspired process of gradually adding and removing noise, achieving impressive results while remaining relatively lightweight compared to large language models. Their smaller size and, in some cases, open-source availability have made them a catalyst for innovation, allowing researchers and developers to experiment with new possibilities.

Generative AI Architectures:

The architecture of a generative AI model is its blueprint, dictating how it learns, processes information, and ultimately creates new content. Common architectures include:

- Variational Autoencoders (VAEs),

- Generative Adversarial Networks (GANs),

- Diffusion Models, and

- Transformer-based Models.

The right architecture depends on the specific task and type of data being generated. For example, GANs excel at creating realistic images, while Transformers are often used for text generation. Diffusion models are becoming increasingly popular for both image and text generation, demonstrating their versatility.

Variational autoencoders (VAEs):

Autoencoders are deep learning models that compress (encode) large amounts of unlabeled data into a more compact form and then reconstruct (decode) the data back to its original form. While they can technically generate new content, their primary use lies in data compression and decompression for storage and transfer.

Variational Autoencoders (VAEs), introduced in 2013, are an evolution of autoencoders. They can decode multiple variations of the encoded data, allowing them to generate diverse and novel outputs. By training VAEs to generate variations towards specific goals, they can achieve greater accuracy and fidelity over time. Early applications of VAEs included tasks like anomaly detection in medical imaging and natural language generation.
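To make the difference from a plain autoencoder more concrete, here is a minimal sketch of the sampling step in a VAE. The `encode` and `decode` functions are hypothetical stand-ins with hand-picked weights, not trained networks, and the fixed log-variance is an assumption for illustration. The point is only that the encoder describes a distribution over latent codes rather than a single code, so each decode can draw a fresh sample and produce a different variation.

```python
import math
import random


def encode(x):
    """Toy encoder: maps a 2-D input to the mean and log-variance of a 1-D latent.
    The weights are hand-picked for illustration, not learned."""
    mean = 0.5 * x[0] + 0.5 * x[1]
    log_var = -2.0  # assumed constant; a real encoder would predict this too
    return mean, log_var


def sample_latent(mean, log_var, rng):
    """Reparameterization: z = mean + sigma * eps, with eps drawn from N(0, 1)."""
    sigma = math.exp(0.5 * log_var)
    return mean + sigma * rng.gauss(0.0, 1.0)


def decode(z):
    """Toy decoder: maps the 1-D latent back to a 2-D output."""
    return [z, z]


def kl_to_standard_normal(mean, log_var):
    """KL divergence from N(mean, sigma^2) to N(0, 1), the usual VAE regularizer."""
    return 0.5 * (math.exp(log_var) + mean ** 2 - 1.0 - log_var)


def generate_variations(x, n, seed=0):
    """Encode x once, then decode n different latent samples."""
    rng = random.Random(seed)
    mean, log_var = encode(x)
    return [decode(sample_latent(mean, log_var, rng)) for _ in range(n)]


if __name__ == "__main__":
    for variation in generate_variations([1.0, 3.0], 3):
        print(variation)
```

In a trained VAE, the reconstruction error and the KL term would be minimized together, which is what keeps the latent space smooth enough for sampled codes to decode into plausible outputs.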

Generative adversarial networks (GANs):

An important turning point was the development of Generative Adversarial Networks (GANs) by Ian Goodfellow and his colleagues in 2014. GANs presented a powerful, novel way to generate synthetic images that are nearly indistinguishable from real ones.

They work by pitting two neural networks against each other: a generator, which produces synthetic data, and a discriminator, which attempts to distinguish between real and synthetic data. The generator gradually improves through this process, learning to create data that the discriminator cannot distinguish from real data.
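The generator-versus-discriminator loop can be sketched on a deliberately tiny problem. Everything below is an illustrative assumption rather than a real GAN: the "data" are single numbers drawn from a Gaussian, the generator is the linear map `a * z + b`, the discriminator is a logistic unit `sigmoid(w * x + c)`, and the gradients are written out by hand instead of using a deep learning library. The generator uses the common non-saturating loss `-log D(fake)`.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def discriminator_loss(w, c, x_real, x_fake):
    """-log D(x_real) - log(1 - D(x_fake)), with D(x) = sigmoid(w * x + c)."""
    return -math.log(sigmoid(w * x_real + c)) - math.log(1.0 - sigmoid(w * x_fake + c))


def discriminator_grads(w, c, x_real, x_fake):
    """Gradient of the discriminator loss with respect to (w, c)."""
    d_real = sigmoid(w * x_real + c)
    d_fake = sigmoid(w * x_fake + c)
    grad_w = (d_real - 1.0) * x_real + d_fake * x_fake
    grad_c = (d_real - 1.0) + d_fake
    return grad_w, grad_c


def generator_loss(a, b, w, c, z):
    """-log D(G(z)), with G(z) = a * z + b."""
    return -math.log(sigmoid(w * (a * z + b) + c))


def generator_grads(a, b, w, c, z):
    """Gradient of the generator loss with respect to (a, b)."""
    d_fake = sigmoid(w * (a * z + b) + c)
    grad_x = (d_fake - 1.0) * w  # gradient with respect to the fake sample
    return grad_x * z, grad_x


def train_toy_gan(steps=500, lr=0.01, real_mean=4.0, real_std=0.5, seed=0):
    """Alternate one discriminator update and one generator update per step."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.1, 0.0  # discriminator parameters
    for _ in range(steps):
        x_real = rng.gauss(real_mean, real_std)
        z = rng.gauss(0.0, 1.0)
        # Discriminator: get better at telling real from fake.
        gw, gc = discriminator_grads(w, c, x_real, a * z + b)
        w, c = w - lr * gw, c - lr * gc
        # Generator: change its output so the updated discriminator rates it as real.
        ga, gb = generator_grads(a, b, w, c, z)
        a, b = a - lr * ga, b - lr * gb
    return (a, b), (w, c)


if __name__ == "__main__":
    print(train_toy_gan())
```

Even in a toy setting like this, the two sets of parameters chase each other rather than descending a single fixed loss, which hints at why training GANs at scale proved challenging.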

Diffusion models:

Introduced in 2015, diffusion models work by first adding noise to the training data until it’s random and unrecognizable, and then training the algorithm to iteratively remove that noise to reveal a desired output.
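The noising half of this process has a simple closed form in the commonly used DDPM formulation, and a short sketch may help. The code below assumes a linear noise schedule (the default values are the ones commonly quoted for DDPM) and treats data as a plain list of numbers. The function names are illustrative. There is no trained network here: `estimate_x0` is handed the true noise simply to show what a perfect noise prediction would recover.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Forward process: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    xt = [signal_scale * x + noise_scale * e for x, e in zip(x0, eps)]
    return xt, eps


def estimate_x0(xt, predicted_eps, alpha_bar):
    """Invert the forward formula given an estimate of the noise that was added."""
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [(x - noise_scale * e) / signal_scale for x, e in zip(xt, predicted_eps)]


if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = make_alpha_bars(1000)
    x0 = [0.8, -0.3, 0.1]
    xt, eps = add_noise(x0, alpha_bars[500], rng)
    print("noisy:", xt)
    print("recovered with the true noise:", estimate_x0(xt, eps, alpha_bars[500]))
```

In a real diffusion model, a neural network is trained to predict `eps` from the noisy sample and the step index, and generation runs the denoising over many small steps starting from pure noise.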

Diffusion models take more time to train than VAEs or GANs, but ultimately offer finer-grained control over output, particularly for high-quality image generation. DALL-E 2, OpenAI’s image-generation tool, is driven by a diffusion model.

Transformers:

Transformers, introduced in Google’s 2017 paper “Attention Is All You Need,” have revolutionized natural language processing (NLP). They utilize “attention” to analyze the relationships between words within a sentence, enabling a more nuanced understanding of context. Unlike traditional methods that process text word by word, transformers can analyze entire sentences at once, significantly boosting efficiency and allowing for the training of much larger models. These advancements have led to the creation of powerful language models like OpenAI’s GPT-3, capable of generating coherent and contextually relevant text.
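The “attention” computation itself is compact enough to sketch in plain Python. The example below shows scaled dot-product attention, the core operation from the paper, on small lists of numbers. It is a simplification under stated assumptions: real transformers first pass the token embeddings through learned projections to get the queries, keys, and values, use multiple attention heads, and run on optimized tensor libraries. The function names are illustrative.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """For each query, weight every value by how well its key matches the query."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Three "tokens" with 2-D vectors, used as queries, keys, and values alike.
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
    for row in weights:
        print([round(w, 3) for w in row])
```

Each row of weights says how much one token draws on every other token, and because every row is computed independently of the others, the whole sequence can be processed in parallel rather than word by word.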

Transformers are a crucial component of the modern generative AI landscape, powering the creation of realistic images, music, text, and even videos. As we continue to refine these technologies, the potential for generative AI to shape our future is boundless.

Applications of Generative AI:

Generative AI is transforming various industries by enabling the creation of new, original content. Here are some key applications:

- Image Generation: Creating photorealistic images, artistic styles, and even medical images.

- Text Generation: Writing stories, articles, poems, code, and engaging in natural-sounding conversations.

- Music Generation: Composing original music scores, exploring different genres, and personalizing soundtracks.

- Video Generation: Producing realistic videos, creating deepfakes for entertainment, and generating marketing materials.

- Product Design: Generating innovative product designs, optimizing existing products for efficiency, and creating 3D models for visualization.

- Drug Discovery: Generating new drug candidates and optimizing existing molecules for effectiveness and safety.

- Architecture & Construction: Designing buildings, generating 3D models for visualization and planning, and creating virtual tours.

- Scientific Simulation: Simulating complex physical phenomena for weather forecasting, climate modeling, and scientific research.

- Data Augmentation: Creating synthetic data for training AI models and increasing the diversity of training datasets.

- Personalized Experiences: Generating tailored content and recommendations, and personalizing entertainment and educational content.

- Art & Creativity: Exploring new artistic styles and expressions, enabling AI-assisted art creation, and generating novel art forms.

Challenges, Limitations and Risks:

- Data Bias: Generative AI models learn from existing data, which can perpetuate societal biases (e.g., racial, gender, or cultural).

- Fake Content: Generative AI can create realistic but false images, text, audio, and video, fueling misinformation.

- Manipulation: Deepfakes can be used for malicious purposes like defamation or political manipulation.

- Content Ownership: Who owns the rights to generated content?

- Legal Disputes: Potential for copyright infringement and legal battles.

- Data Privacy: Protecting sensitive information used to train generative models.

- Malicious Use: Potential for misuse in cyberattacks, identity theft, and other criminal activities.

- Job Displacement: Generative AI could automate tasks traditionally done by humans, potentially leading to job losses.

- Creative Industries: Impact on artistic professions and the role of human creativity.

Generative AI is rapidly evolving, opening up countless possibilities for innovation across industries. Its impact will continue to grow, transforming how we create, design, and understand the world around us.